Many years ago I made an awful bash script which subscribed to a couple of RSS feeds and pushed notifications to me if it found keywords of value in the title. When it was time to make some changes, I made the jump to Python. Here is the gist of it; you can easily substitute your own choice of database and mobile push service. I use Pushover (which costs a couple of dollars, but is well worth it) and sqlite3.

#!/usr/bin/python3

import feedparser  # pip3 install feedparser
import http.client
import os
import re
import sqlite3
import sys
import urllib.parse

sqlite_db_file = '/home/user/.rss-reader.db'
pushover_token = 'xxx'
pushover_userkey = 'xxx'

# Each keyword is a regular expression; '.' matches every entry.
feeds = [
    {
        'name': 'Lokalavis 1',
        'url': 'http://lokalavis.no/rss.xml',
        'keywords': ['.']
    },
    {
        'name': 'Aftenbladet',
        'url': 'http://www.aftenbladet.no/rss',
        'keywords': [
            'Ryfylke',
            'Lokalt sted 1',
            'Lokalt sted 2',
            'fly(plass|krasj|ulykke)',
            'ryfast',
            'skogbrann'
        ]
    },
    {
        'name': 'Reddit Frontpage',
        'url': 'https://www.reddit.com/.rss',
        'keywords': ['norw(ay|egian)']
    },
    {
        'name': 'Reddit Linux',
        'url': 'https://www.reddit.com/r/linux.rss',
        'keywords': ['debian', 'vim', 'lwn', 'stallman']
    },
    {
        'name': 'HackerNews',
        'url': 'https://hnrss.org/frontpage',
        'keywords': [
            'postgres',
            'norw(ay|egian)',
            'debian'
        ]
    }
]

db = sqlite3.connect(sqlite_db_file)
c = db.cursor()
create_table = '''CREATE TABLE IF NOT EXISTS entries (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    url TEXT,
    summary TEXT
)'''
c.execute(create_table)
db.commit()

def tty():
    # True when run from an interactive terminal, False when run from cron.
    return os.isatty(sys.stdin.fileno())

def save_in_db(url, summary):
    # Remember an entry so it is only pushed once.
    c = db.cursor()
    insert = "INSERT INTO entries VALUES (NULL, ?, ?);"
    c.execute(insert, [url, summary])
    db.commit()

def link_in_db(link):
    # Has this link already been pushed?
    c = db.cursor()
    c.execute("SELECT * FROM entries where url = ?", [link])
    if len(c.fetchall()) > 0:
        return True
    return False

def push(title, summary, link):
    conn = http.client.HTTPSConnection("api.pushover.net:443")
    conn.request("POST", "/1/messages.json",
        urllib.parse.urlencode({
            "token": pushover_token,
            "user": pushover_userkey,
            "title": title,
            "message": summary,
            "url": link
        }), {"Content-type": "application/x-www-form-urlencoded"})
    conn.getresponse()

for feed in feeds:
    if tty(): print("Fetching {}".format(feed['url']))
    p = feedparser.parse(feed['url'])
    for entry in p.entries:
        link = entry.link
        summary = entry.summary
        for keyword in feed['keywords']:
            # Push on the first keyword hit; later keywords are skipped
            # because the link is then already in the database.
            if re.search(keyword, summary) and not link_in_db(link):
                if tty(): print("Keyword hit '{}' in {}".format(keyword, entry.title))
                push_title = "{} ({})".format(feed['name'], keyword)
                title_and_summary = "{} - {}".format(entry.title, summary)
                push(push_title, title_and_summary, link)
                save_in_db(link, title_and_summary)
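
One thing worth knowing about the keyword patterns: `re.search` is case-sensitive by default, so a pattern like 'debian' will not match 'Debian' in a headline. Here is a minimal sketch of a case-insensitive matcher. The helper name `first_matching_keyword` is hypothetical and not part of the script above, and checking the title as well as the summary is an assumption here.

```python
import re

def first_matching_keyword(keywords, *texts):
    """Return the first keyword pattern found in any of the texts, else None.

    Hypothetical helper: matches case-insensitively, unlike a plain re.search().
    """
    for keyword in keywords:
        for text in texts:
            if re.search(keyword, text, re.IGNORECASE):
                return keyword
    return None

keywords = ['debian', 'fly(plass|krasj|ulykke)']
print(first_matching_keyword(keywords, 'Debian 12 released', ''))
print(first_matching_keyword(keywords, 'Nyheter', 'Flyulykke i Ryfylke'))
print(first_matching_keyword(keywords, 'Nothing of interest', ''))
```

In the main loop this could stand in for the inner `for keyword in ...` loop, called with `entry.title` and `entry.summary`.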

Add to cron:

*/10 * * * * /home/user/bin/rss-reader.py

Profit!

I have added a summary field to the database for possible later use.
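
Should that later use ever arrive, the stored summaries can be searched straight from sqlite3. This is a minimal sketch, assuming the `entries` table from the script. The function name `search_entries`, the example rows and the in-memory database are only for illustration.

```python
import sqlite3

def search_entries(db, term):
    """Return (url, summary) rows whose summary contains term (hypothetical helper)."""
    c = db.cursor()
    # SQLite's LIKE is case-insensitive for ASCII characters by default.
    c.execute("SELECT url, summary FROM entries WHERE summary LIKE ? ORDER BY id",
              ['%' + term + '%'])
    return c.fetchall()

# In-memory database with the same schema as the script, for demonstration only.
db = sqlite3.connect(':memory:')
db.execute('''CREATE TABLE IF NOT EXISTS entries (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    url TEXT,
    summary TEXT
)''')
db.execute("INSERT INTO entries VALUES (NULL, ?, ?)",
           ['http://example.com/1', 'Ferry cancelled - strong winds'])
db.execute("INSERT INTO entries VALUES (NULL, ?, ?)",
           ['http://example.com/2', 'Debian release - new version'])
db.commit()

for url, summary in search_entries(db, 'ferry'):
    print(url, summary)
```

Against the real database, pass `sqlite3.connect(sqlite_db_file)` instead of the in-memory connection.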

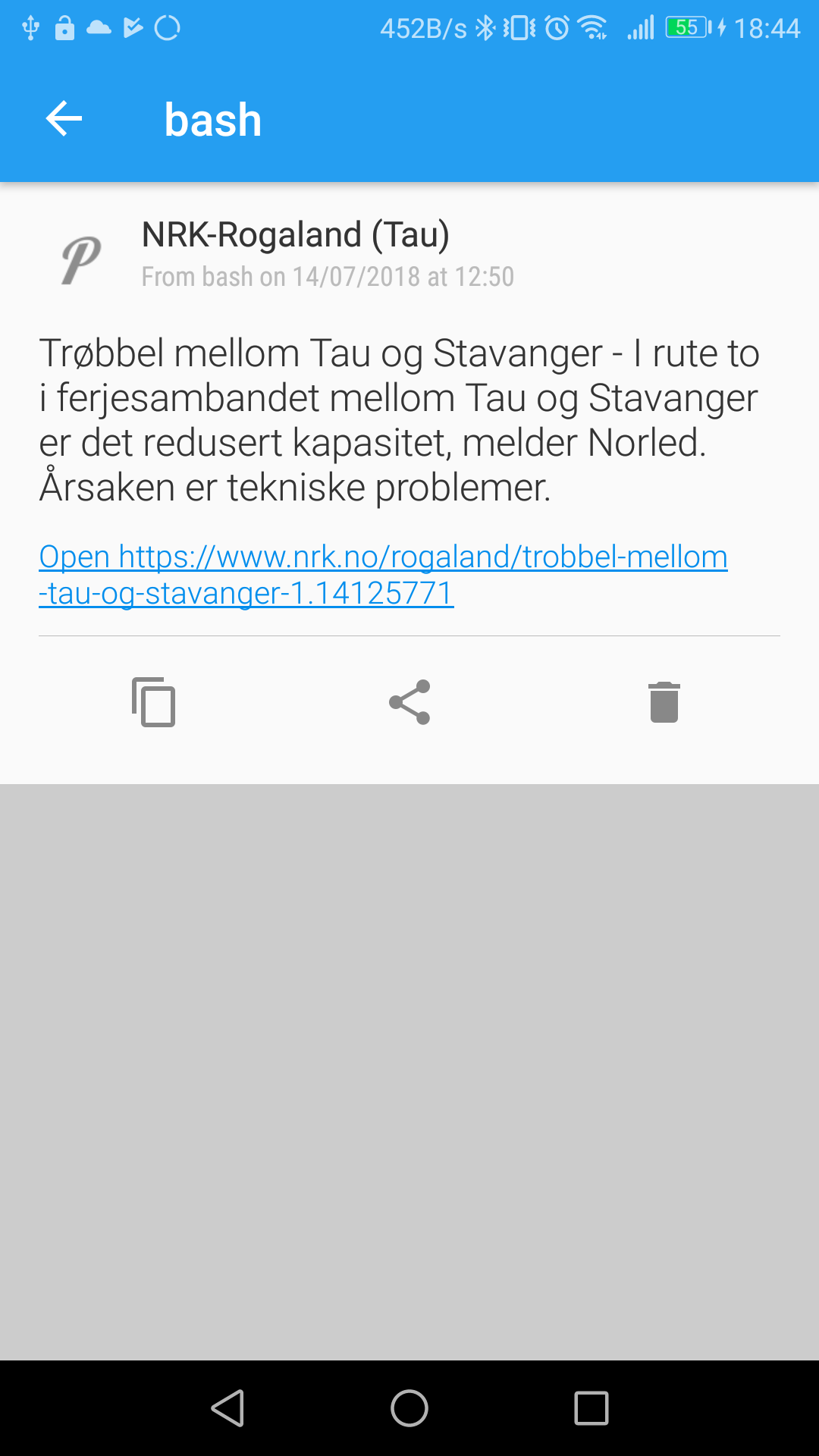

Here is a screenshot of Pushover telling me the local ferry is having trouble. (-: